Qualcomm’s new AI200 and AI250 inference solutions aim to revolutionize data center performance with faster, more secure, and energy-efficient AI acceleration. But can they help QCOM outpace competitors like NVIDIA and AMD?

Qualcomm Expands Its AI Footprint

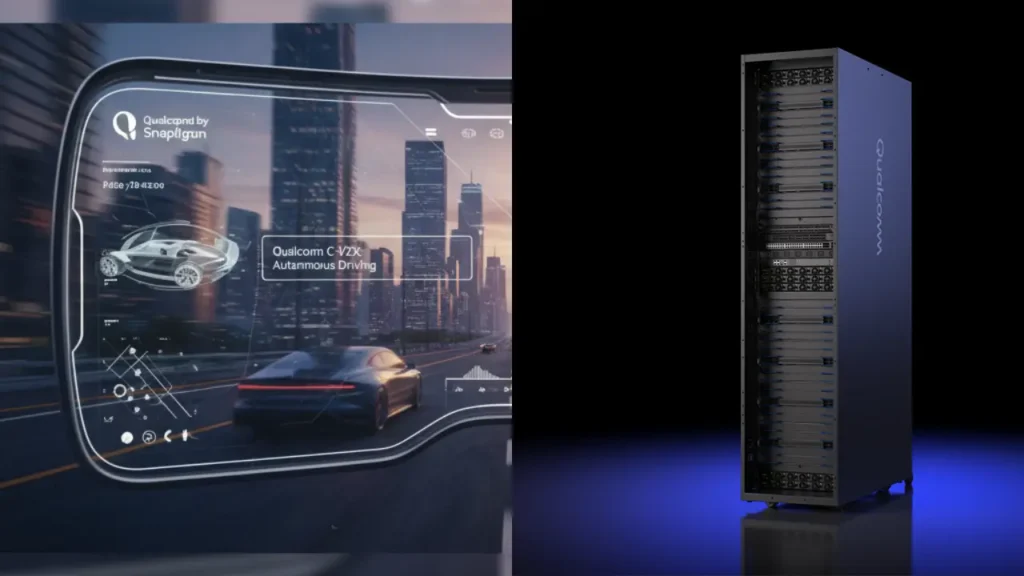

Qualcomm Incorporated (QCOM) has stepped up its presence in the artificial intelligence industry with the launch of its AI200 and AI250 accelerator cards and racks. These inference-optimized solutions are built to enhance data center performance using Qualcomm’s Neural Processing Unit (NPU) technology.

Both products integrate confidential computing to protect sensitive AI workloads and use direct liquid cooling to improve thermal efficiency. This combination positions Qualcomm as a strong player in the growing AI inference market.

AI200 and AI250: Designed for Real-World AI Applications

The Qualcomm AI250 introduces a near-memory computing architecture, delivering up to 10x higher effective memory bandwidth while reducing power consumption. The AI200, meanwhile, is a rack-level inference solution designed to run large language models (LLMs), multimodal models, and other complex AI workloads at a lower total cost of ownership.

Companies are shifting their focus from training large AI models to inference, that is, running trained models in production, often in real time. According to Grand View Research, the global AI inference market was valued at $97.24 billion in 2024 and is expected to grow at a 17.5% CAGR from 2025 to 2030. Qualcomm’s launches are timed to coincide with this expansion.
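To put the growth rate in perspective, the short Python sketch below compounds the 2024 valuation forward at the quoted CAGR. It assumes 2024 is the base year and that growth compounds once per year through 2030 (six periods). The function name and the year-by-year breakdown are illustrative only, and the figures are back-of-the-envelope arithmetic, not numbers published by Grand View Research.

```python
def project_market_size(base_value_bn: float, cagr: float, years: int) -> float:
    """Compound a base value forward by `years` at a constant annual growth rate."""
    return base_value_bn * (1.0 + cagr) ** years


# Figures quoted in the article; the base-year convention is an assumption.
BASE_2024_BN = 97.24   # 2024 market size, in billions of USD
CAGR = 0.175           # 17.5% compound annual growth rate

for year in range(2025, 2031):
    size = project_market_size(BASE_2024_BN, CAGR, year - 2024)
    print(f"{year}: ${size:,.1f}B")
```

Under these assumptions, the market would reach roughly $256 billion by 2030, or about 2.6 times its 2024 size. A different base-year convention would shift the result.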

Adding to its momentum, global AI firm HUMAIN has already adopted Qualcomm’s new solutions to deliver high-performance inference services in Saudi Arabia and across the world.

How Qualcomm Stacks Up Against Competitors

The AI inference race is heating up, and Qualcomm faces strong rivals such as NVIDIA (NVDA), Intel (INTC), and AMD (AMD).

| Company | Key AI Inference Products | Focus Area | Strength |
|---|---|---|---|
| Qualcomm (QCOM) | AI200, AI250 | Data centers, LLMs | Energy-efficient, secure, cost-effective |
| NVIDIA (NVDA) | Blackwell, H200, L40S, RTX | Cloud & data centers | Industry leader in speed & scalability |
| Intel (INTC) | Crescent Island GPU | AI inference benchmarks | Expanding GPU suite, MLPerf compliance |
| AMD (AMD) | Instinct MI350 Series | Generative AI, HPC | High performance, power-efficient cores |

While NVIDIA continues to dominate the market, Qualcomm’s focus on efficiency, affordability, and scalability could carve out a strong niche among cost-sensitive enterprises and data center operators.

QCOM’s Market Performance and Outlook

Over the past year, Qualcomm shares have gained 9.3%, while the broader semiconductor industry surged by 62%. The company currently trades at a forward P/E ratio of 15.73, significantly below the industry average of 37.93, which may indicate that the stock is undervalued relative to its peers.

Earnings estimates for 2025 remain steady, and projections for 2026 have slightly improved (up 0.25% to $11.91 per share).
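The valuation figures above can be turned into a few derived numbers. The sketch below is plain arithmetic on the values quoted in this section. The helper names are hypothetical, and the prior 2026 estimate is backed out from the stated 0.25% revision, so it is an inference and not a reported figure.

```python
def relative_discount(value: float, benchmark: float) -> float:
    """Fraction by which `value` sits below `benchmark` (0.25 means 25% lower)."""
    return 1.0 - value / benchmark


def prior_estimate(current: float, pct_change: float) -> float:
    """Back out the earlier estimate given the current one and its fractional change."""
    return current / (1.0 + pct_change)


# Figures quoted in the article.
QCOM_FORWARD_PE = 15.73
INDUSTRY_FORWARD_PE = 37.93
QCOM_1Y_RETURN_PCT = 9.3
INDUSTRY_1Y_RETURN_PCT = 62.0
EPS_2026 = 11.91
EPS_2026_REVISION = 0.0025  # up 0.25%

pe_discount = relative_discount(QCOM_FORWARD_PE, INDUSTRY_FORWARD_PE)
return_gap = INDUSTRY_1Y_RETURN_PCT - QCOM_1Y_RETURN_PCT
eps_before = prior_estimate(EPS_2026, EPS_2026_REVISION)

print(f"Forward P/E discount to industry: {pe_discount:.1%}")
print(f"One-year return gap: {return_gap:.1f} percentage points")
print(f"Implied prior 2026 EPS estimate: ${eps_before:.2f}")
```

On these inputs, Qualcomm trades at a forward multiple nearly 60% below the industry average and trails the industry's one-year return by about 53 percentage points. The revision moved the 2026 estimate by roughly three cents per share.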

With growing adoption of AI inference technologies and strong demand for energy-efficient data processing, Qualcomm’s new product line could help stabilize growth and position the company competitively in the evolving AI landscape.

Final Thoughts

Qualcomm’s AI inference strategy shows a clear focus on scalable, secure, and power-efficient performance. While rivals like NVIDIA and AMD remain dominant in certain segments, the AI200 and AI250 may enable Qualcomm to grow steadily in a rapidly expanding market.

If executed well, these solutions could mark a key turning point for Qualcomm’s long-term growth prospects in the global AI ecosystem.